Research

Professor Matthias Scheutz

Lab: The Human-Robot Interaction Laboratory

The Human-Robot Interaction Lab (HRILab) at Tufts University aims to enable natural interactions between humans and robots. This includes being able to instruct robots in natural language and to hold task-based dialogues with robots about their performance and their decisions. Robots, in turn, should be able to learn on the fly from humans when they are missing knowledge, and should be able to explain and justify their behavior to humans with recourse to norms and ethical principles. The HRILab is currently pursuing research in fast interactive task learning, robot performance self-assessment, and mechanisms for robot resilience, all in the context of human-machine teaming and, more generally, open-world HRI.

Professor Jivko Sinapov

Research: Transfer Learning of Sensorimotor Knowledge for Embodied Agents

Prior work has shown that learning models of objects can allow robots to perceive object properties that may be undetectable using visual input alone. However, learning such models is costly because it requires extensive physical exploration of the robot's environment. Furthermore, such models are specific to the individual robot that learns them and cannot be used directly by other, morphologically different robots that have different actions and sensors. To address these limitations, our current work focuses on enabling robots to share sensorimotor knowledge with one another so as to speed up learning. We have proposed two main approaches: 1) an encoder-decoder approach that attempts to map sensory data from one robot to another, and 2) a kernel manifold learning framework that takes sensorimotor observations from multiple robots and embeds them in a shared space, to be used by all robots for perception and manipulation tasks.

Professor Elaine Short

Lab: The Assistive Agent Behavior and Learning Lab

The goal of the AABL lab is to make robots more helpful. Using insights from focus groups, user studies, and participatory research, we design new algorithms for human-robot interaction (HRI) and evaluate them in the real-life situations where robots could be most useful.

We work at the intersection of assistive technology and social robotics (including socially assistive robotics): we are especially interested in robots interacting with users under-served by typical HRI research, such as children, older adults, and people with disabilities.

Ultimately, our work gives robots the intelligence to understand what people want, to make good decisions about how to help, and to help appropriately in real-world interactions.

Professor Usman Khan

Research: Data and network science, systems and control, and optimization theory and algorithms with applications in autonomous multi-agent systems, Internet of Mobile Things (IoMTs), fleets of driverless vehicles, and smart-and-connected cities

Related articles:

Futuristic Inspections for Bridge Safety, Tufts Now

SPARTN Lab bridges theories, practice with quadcopter drones, Tufts Daily

Featured Profile, Jumbo Magazine

Videos:

Signal Processing and Robotic Networks (SPARTN)

Camera-based navigation, control, and 3D-scene reconstruction

Unmanned camera-based navigation–remote monitoring

Professor JP de Ruiter

Research: Cognitive foundations of human communication

Related article:

James the Robot Bartender Serves Drinks in Binary, PC Magazine

Lecture:

Social robotics lecture

Professor Chris Rogers

Research: Center for Engineering Education and Outreach (CEEO)

A number of faculty at the Center for Engineering Education and Outreach study the interaction between robots and children. We look at what sort of learning results from different ways of playing with robots, how different prompts can elicit different skills in students, and how designing, building, and coding robots can provide motivation for learning more general engineering skills. Our current research areas include identifying the different kinds of learning catalyzed by following instructions as opposed to solving open-ended problems in robotics, demystifying AI for K-12 students, alternative ways of teaching computational thinking skills, multiple methods of supporting online robotic collaboration and learning, and developing very low-cost robotic platforms for school partners in other parts of the world. Much of our work takes a playful approach to learning: promoting failure and iteration, finding personally relevant projects, and valuing and responding to the ideas and thinking of the individual student. Our work is funded by the NSF, LEGO Education, the LEGO Foundation, PTC, and National Instruments.

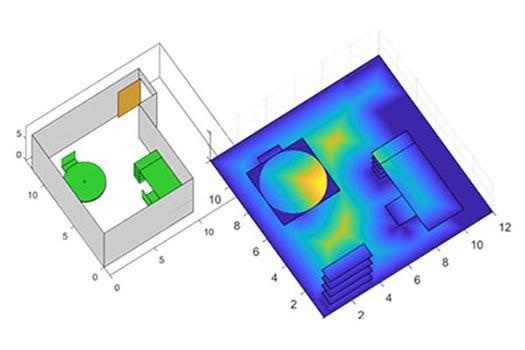

Professor Jason Rife

Research: Modeling human perception for search-and-rescue operations, and fusing model and sensor data to track group movements during emergency response. Sample paper: "Who goes there? Using an agent-based simulation for tracking population movement"