Advancing values-driven AI and human-robot interaction

By Kiely Quinn

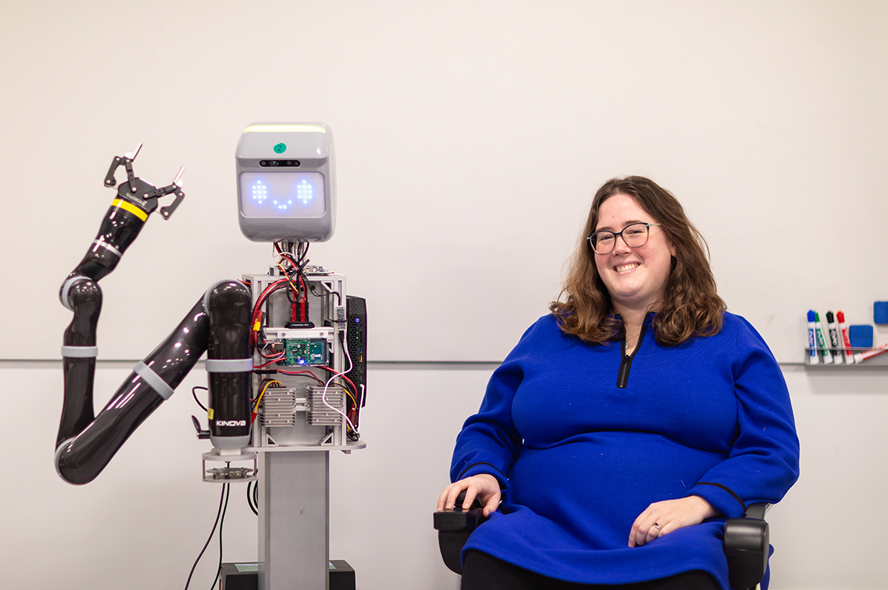

As artificial intelligence and robotics continue to advance and become more integrated with society, Clare Boothe Luce Assistant Professor Elaine Schaertl Short and other researchers at Tufts are working to ensure that interactions between humans and these technologies remain ethical and collaborative. Through her work as principal investigator of the Assistive Agent Behavior and Learning Lab (AABL) in the Department of Computer Science, Short uses a values-driven approach to shift narratives of disability and technology and, in doing so, improve the quality of AI and robotics for everyone. Her work has long focused on people who are underserved by technology research, including children, older adults, and people with disabilities. Short earned her PhD in Computer Science from the University of Southern California and joined Tufts in 2019.

Fostering successful relationships between humans and robots can be challenging outside of a controlled setting, given that most real-world interactions are highly situational and constantly changing. Humans can fluidly adapt to changes as they arise, but robots do not intrinsically have this ability. Even something as simple as moving around in a crowd of people can be a challenge for a robot. Poor human-robot interaction erodes trust in robots’ usefulness and can lead to distress, frustration, or further consequences for the human user if the robot doesn’t respond as expected.

Disability community values in robotics

As a disabled faculty member, Short draws on disability community values, such as interdependence, to improve the quality of human-robot interaction and AI for all users. In an interdependent relationship, both parties are dependent on one another, instead of one party relying fully on the other. Her perspective challenges some of the dominant narratives in robotics. For example, instead of striving for robots to be completely autonomous, Short aims to improve their ability to work with humans to solve problems together. According to Short, a key part of this interdependent relationship between humans and robots lies in the human maintaining some level of control over the robot’s behavior when it is providing assistance, a value which also stems from the disability community.

Historically, society has used a medical model of disability and has treated disabled people as problems to be fixed, with little input from disabled people themselves. The relationship between humans and robots, particularly for disabled users, can fall into the same pattern of the robot providing a service to a passive user. While improving quality of life for people with disabilities is often cited as a benefit of robotic innovations, disabled people are critically underrepresented in engineering fields, and their involvement in designing these technologies is often minimal.

By taking a disability-centered perspective on robotics and supporting disabled people in developing their own engineering skills, Short wants to create a more balanced human-robot relationship that empowers users to have control over the actions and outcomes of the robot’s assistance. To that end, Short develops robots that can learn with feedback from people in real-world situations and adapt to partial user control of the robot’s behavior. Her lab works at the intersection of assistive technology, AI, and social robotics, with the goal of making robots smarter, more helpful, and more inclusive.

Expanding robotic capabilities

Many robotic innovations geared toward disabled people focus on pragmatic activities of daily living, such as getting dressed, brushing teeth, or preparing meals. Although these technologies are important, Short’s work also addresses an often-overlooked area of life for disabled people. Her work emphasizes creative or entertainment-based tasks – such as painting or cake decorating – that improve the quality of life for users by offering opportunities for creativity and joy. “I want to push back against the limited vision of disability that engineers tend to have,” she says. As a result, her work acknowledges disabled users as multifaceted people with autonomy, preferences, and style.

In a recent study, Short and a team of Tufts researchers programmed a robot to perform a painting task. The robot took care of the technical aspects, but users were given control over the style of the painting. By maintaining partial control over the final results, users were able to express themselves creatively with the robot. The research from this study will be presented along with two other recent papers from Short’s lab at the upcoming Association for Computing Machinery/Institute of Electrical and Electronics Engineers Conference on Human-Robot Interaction in Boulder, CO.

Shifting the paradigm of how robots and humans can work together requires an open mindset. Short advocates for engineers to recognize the influence of their own life experiences on their engineering decisions. “If engineers aren’t aware that the decisions that they’re making are driven by their perspective, background, values, and experiences, then they end up thinking that the decision that they made was somehow objectively perfect, when in fact they are actually baking this particular perspective into what they’re doing,” she says.

Short continues to challenge assumptions about disability and technology and work towards a future in which technology is designed with fluidity in mind. She regularly collaborates with fellow Tufts faculty members including Karol Family Applied Technology Professor Matthias Scheutz and Assistant Professor Jivko Sinapov, both of the Department of Computer Science, as well as Assistant Professor Trevion Henderson and John R. Beaver Professor Chris Rogers, both of the Department of Mechanical Engineering.

Studying the relationship between humans and robots

Short embeds her values in her research methods by testing her robots in real-world situations, which bring in a more diverse range of users and more natural interaction patterns than a traditional lab setting allows. For example, researchers in Short’s lab recently deployed a robotic arm in the lobby of the Joyce Cummings Center on Tufts’ Medford/Somerville campus. The arm used a standard algorithm for assistive devices, but tried to “help” the user towards the wrong goal, to test users’ ability to identify and correct issues with the robot’s performance.

The researchers found that, by and large, users noticed the robot’s shortcomings and adapted to the situation to achieve the objective they wanted, despite the faulty behavior. “They’re not just passively letting the assistance happen, but they’re actually actively reasoning about it and trying to make things happen,” says Short. Reframing the relationship between humans and robots to value human users’ participation in robotic functioning could lead to powerful results, Short says. “If we treat users as being intentional and smart, reasoning about the way the system works and trying to optimize for the whole system, what more could we do with that?”

