Mobile sensor systems

By Joel Lima, E21

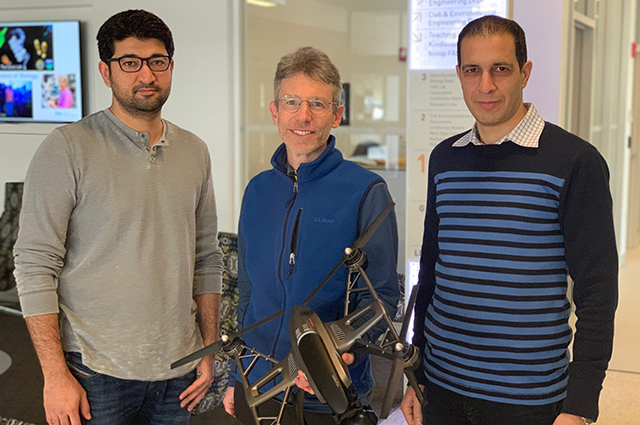

Associate Professors Babak Moaveni (Department of Civil and Environmental Engineering), Jason Rife (Department of Mechanical Engineering), and Usman Khan (Department of Electrical and Computer Engineering) were recently awarded a grant from the National Science Foundation to study the use of mobile sensors to characterize structural dynamics. One potential application is to have flying drones place sensors precisely, supporting rapid identification of damage in a building after an earthquake.

The field of automated structural health monitoring is evolving as a way to complement traditional visual inspection by structural engineers. In concept, smart sensor networks might be deployed on new infrastructure, such as buildings, bridges, or wind turbines, to provide a real-time diagnosis of structural health. To date, proposals for such smart sensor networks have focused on fixed sensors, like accelerometers, that would be rigidly attached to the structure of interest.

The approach of the Tufts team is unique in that it will examine the potential benefits of mobile sensors for analyzing structural health. If sensors can be placed in specific locations by robots, retrieved, and then placed in new locations, it may be possible to localize damage much more precisely and much more quickly than would be possible with fixed sensors. The team plans to use Bayesian inference and reinforcement learning to determine the best sensor locations and to re-evaluate those positions continually as new batches of data become available. Figure 1 illustrates the proposed approach.

[Figure: Flowchart showing optimization of sensor placement -> automated data collection -> sequential system identification, all feeding into each other in a loop.]

Fig. 1 (a) Key steps in proposed approach, and (b) iterative deployment of sensors for high-resolution identification.
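To make the loop in Figure 1 concrete, here is a minimal sketch of how a Bayesian update could drive sensor placement. It is not the Tufts team's method: the one-dimensional structure with ten candidate sites, the binary "damage detected" sensor, the detection probabilities, and all function names are assumptions made for illustration, and a greedy one-step entropy criterion stands in for the reinforcement learning the team plans to use.

```python
import math

def entropy(belief):
    """Shannon entropy (in nats) of a discrete belief over damage sites."""
    return -sum(p * math.log(p) for p in belief if p > 0)

def likelihood(reading, sensor, damage, radius=1, p_hit=0.9, p_false=0.1):
    """P(reading | sensor site, damage site) for a hypothetical binary detector.

    Assumption: the sensor reports damage with probability p_hit when the
    damage lies within `radius` sites, and with probability p_false otherwise.
    """
    p_detect = p_hit if abs(sensor - damage) <= radius else p_false
    return p_detect if reading else 1.0 - p_detect

def update(belief, sensor, reading):
    """Bayes' rule: reweight each candidate damage site by the likelihood."""
    post = [b * likelihood(reading, sensor, d) for d, b in enumerate(belief)]
    total = sum(post)
    return [p / total for p in post]

def expected_entropy(belief, sensor):
    """Average posterior entropy over both possible readings at `sensor`."""
    total = 0.0
    for reading in (0, 1):
        p_reading = sum(b * likelihood(reading, sensor, d)
                        for d, b in enumerate(belief))
        if p_reading > 0:
            total += p_reading * entropy(update(belief, sensor, reading))
    return total

def best_sensor(belief):
    """Greedy choice: the site whose reading is expected to be most informative."""
    return min(range(len(belief)), key=lambda s: expected_entropy(belief, s))

def localize(true_damage, n_sites=10, n_rounds=6):
    """Place, read, update, and re-place one mobile sensor for a few rounds."""
    belief = [1.0 / n_sites] * n_sites  # uniform prior over damage sites
    for _ in range(n_rounds):
        sensor = best_sensor(belief)
        # Simulated noise-free reading, used only to exercise the loop.
        reading = 1 if abs(sensor - true_damage) <= 1 else 0
        belief = update(belief, sensor, reading)
    return belief
```

Calling `localize(7)` returns a belief over the ten sites after six placements. Each round mirrors one pass through the loop: choose a location, collect a reading, and update the estimate before choosing again.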

This research is funded by the National Science Foundation.

Department: Civil and Environmental Engineering